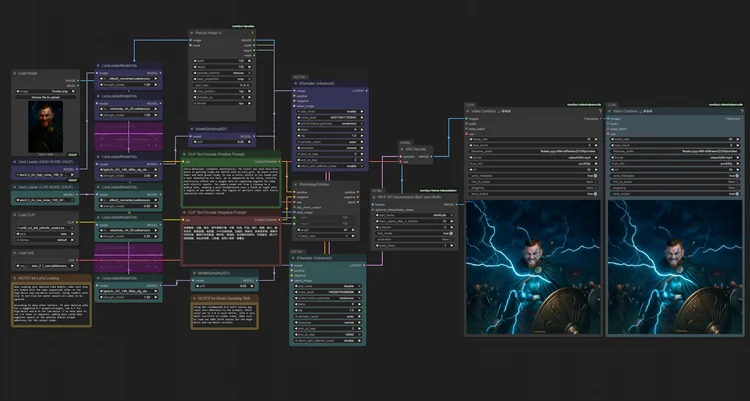

AI computing is standing at a critical crossroads. With the rapid evolution of Transformer models, real-time generative AI, and multimodal systems, workloads are becoming increasingly “hungry,” pushing existing hardware to its performance limits. For most users, generative AI (AIGC) is the easiest way to experience the power of artificial intelligence — from text-to-image to video generation to AI avatars.

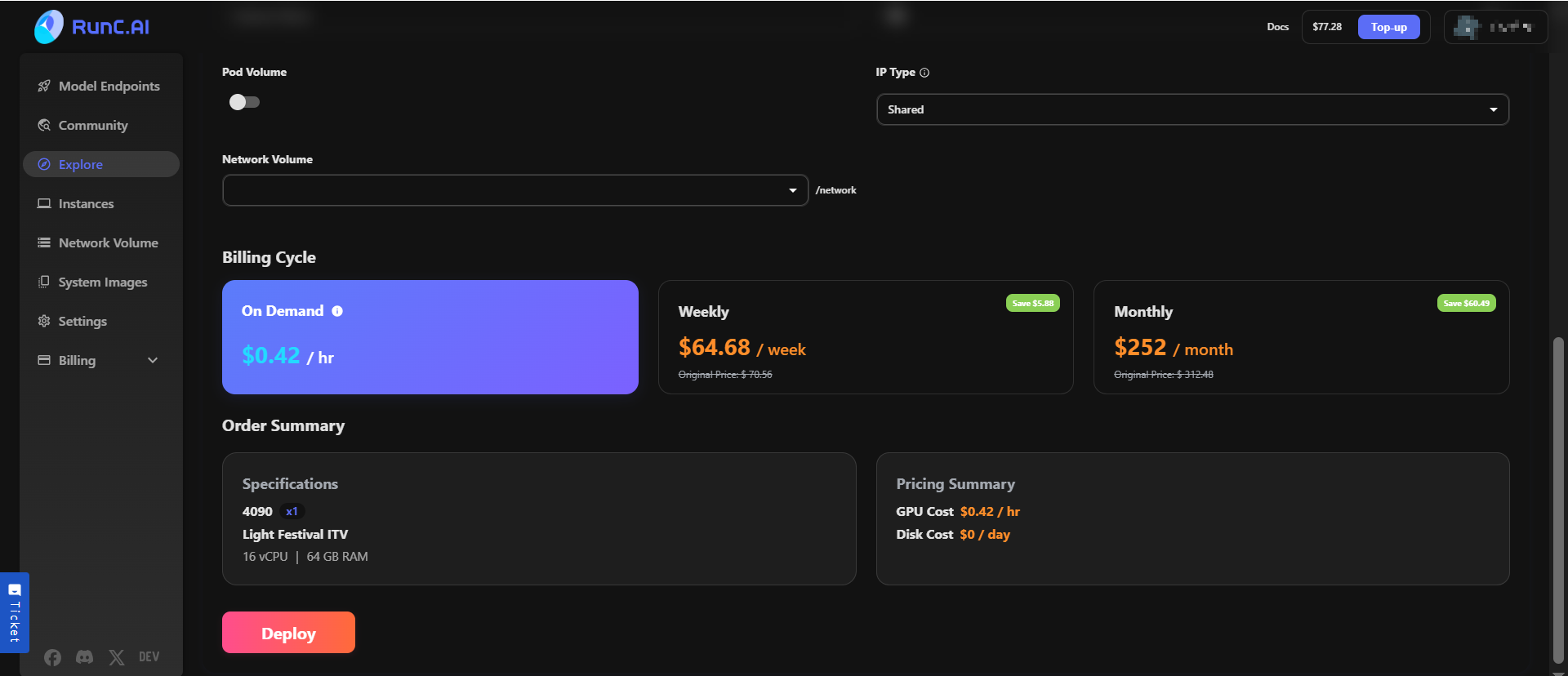

However, the skyrocketing price of GPUs has created a barrier for many AI enthusiasts and indie developers. This is where GPU cloud rental becomes the smart alternative. On platforms like RunC.AI, you can access an RTX 4090 from $0.42/hour, making cutting-edge AI development more accessible than ever.

Why the RTX 4090 Is the Sweet Spot for AIGC

For AI developers and hobbyists, the RTX 4090 strikes a strong balance of price, performance, and efficiency. It’s powerful enough to run most state-of-the-art AIGC workloads, while still being affordable compared to enterprise-grade GPUs like the A100 or H100. Below are the specifications of the NVIDIA GeForce RTX 4090.

| Specification | NVIDIA GeForce RTX 4090 |
|---|---|
| Architecture | Ada Lovelace |
| Transistor Count | 76.3 billion |
| CUDA Cores | 16,384 |
| Shader Performance | 83 TFLOPS |
| Tensor Cores | 4th Gen, 1,321 AI TOPS |
| Ray Tracing | 3rd Gen, 191 TFLOPS |
| Clock Speed (Base / Boost) | 2.23 GHz / 2.52 GHz |
| DLSS Support | DLSS 3 / 3.5 |
| Memory Capacity | 24 GB GDDR6X |
| Memory Bus Width | 384-bit |
| Memory Bandwidth | 1 TB/s |
| Power Consumption | 450 W (TDP) |
| MSRP (at launch) | $1,599 |

Benchmark: RTX 4090 in AI Workloads

To measure real-world performance, we ran several LLaMA inference benchmarks in different configurations, using tokens/sec as the key metric. The results show that the RTX 4090 delivers up to 4–5x higher throughput than older consumer GPUs like the RTX 3080, while costing significantly less than enterprise-grade GPUs in cloud environments.

| Model | RTX 4090 (tokens/sec) |
|---|---|
| LLaMA 3.1 8B - Q4 (Test A) | 126 |
| LLaMA 3.1 8B - Q4 (Test B) | 95 |
| LLaMA 3.1 8B - Q4 (Test C) | 108 |
| LLaMA 3.1 8B - Instruct (FP16) | 53 |
| LLaMA 3.1 8B - Instruct (Q8) | 87 |
| LLaMA 3.2 3B - Q4 | 218 |
| LLaMA 3.2 1B - Q4 | 338 |
| LLaMA 3.2 3B - Instruct (FP16) | 108 |
| LLaMA 3.2 1B - Instruct (FP16) | 239 |
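
To make the tokens/sec figures more tangible, here is a minimal sketch that converts them into response time and rental cost per million tokens. It assumes a single request stream that keeps the GPU busy the whole time, plus the $0.42/hr rate quoted in this article; the function names are illustrative, and real throughput will vary with batching, prompt length, and the inference stack.

```python
# Rough conversion from benchmark throughput to latency and rental cost.
# Assumption: one request stream, GPU fully utilized, $0.42/hr rental rate.

HOURLY_RATE_USD = 0.42  # single RTX 4090 rate quoted in this article

# A few tokens/sec figures copied from the benchmark table above.
BENCHMARKS = {
    "LLaMA 3.1 8B - Q4 (Test A)": 126,
    "LLaMA 3.1 8B - Instruct (FP16)": 53,
    "LLaMA 3.2 3B - Q4": 218,
    "LLaMA 3.2 1B - Q4": 338,
}


def seconds_for_tokens(tokens_per_sec: float, n_tokens: int) -> float:
    """Time to generate n_tokens at a steady tokens/sec rate."""
    return n_tokens / tokens_per_sec


def usd_per_million_tokens(tokens_per_sec: float,
                           hourly_rate_usd: float = HOURLY_RATE_USD) -> float:
    """Rental cost of generating one million tokens at a steady rate."""
    tokens_per_hour = tokens_per_sec * 3600
    return hourly_rate_usd / tokens_per_hour * 1_000_000


if __name__ == "__main__":
    for model, tps in BENCHMARKS.items():
        latency = seconds_for_tokens(tps, 500)  # a 500-token reply
        cost = usd_per_million_tokens(tps)
        print(f"{model}: {latency:.1f} s per 500 tokens, ${cost:.2f} per 1M tokens")
```

Because the cost scales inversely with throughput, the quantized variants in the table are cheaper per token than their FP16 counterparts under these assumptions.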

VRAM Requirements for AIGC Models

One of the biggest bottlenecks in AI is VRAM capacity. The RTX 4090’s 24 GB of VRAM makes it flexible enough to handle most scenarios without model sharding or aggressive quantization.

| Model Type | Typical VRAM Requirement | Fits on RTX 4090? |
|---|---|---|
| Stable Diffusion 1.5 | 8–12 GB | ✅ |
| Stable Diffusion XL | 18–20 GB | ✅ |
| Hunyuan | 16–24+ GB | ✅ |
| Hidream | 8–24+ GB | ✅ |
| Flux Kontext | 12–24 GB | ✅ |
| Wan | 16–24+ GB | ✅ |
| LLaMA-7B | ~15 GB | ✅ |
| LLaMA-13B | ~22 GB | ✅ (with quantization for efficiency) |
| LLaMA-70B | 40+ GB | ❌ (requires A100/H100) |

This shows that the RTX 4090 covers most common AI workloads used by indie developers, researchers, and AIGC creators.
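
For language models, a common back-of-the-envelope estimate is that the weights alone take roughly (parameter count × bits per parameter ÷ 8) bytes. The sketch below applies that rule of thumb. The 20% overhead factor for KV cache and activations is an assumption made for illustration, not a measured value, and the function names are hypothetical. Actual usage depends on context length, batch size, and the runtime.

```python
# Rule-of-thumb VRAM estimate for LLM inference (weights only, plus an
# assumed overhead factor). Uses decimal GB (1 GB = 1e9 bytes).

BITS_PER_PARAM = {"fp16": 16, "q8": 8, "q4": 4}


def weight_memory_gb(params_billion: float, bits_per_param: int) -> float:
    """Memory for the weights alone: params * bits / 8 bytes, in GB."""
    return params_billion * bits_per_param / 8


def fits_in_vram(params_billion: float, bits_per_param: int,
                 vram_gb: float = 24.0, overhead: float = 1.2) -> bool:
    """True if weights plus an ASSUMED 20% overhead fit in vram_gb."""
    return weight_memory_gb(params_billion, bits_per_param) * overhead <= vram_gb


if __name__ == "__main__":
    for size in (7, 13, 70):
        for precision, bits in BITS_PER_PARAM.items():
            gb = weight_memory_gb(size, bits)
            verdict = "fits" if fits_in_vram(size, bits) else "does not fit"
            print(f"{size}B @ {precision}: ~{gb:.1f} GB weights, {verdict} in 24 GB")
```

Under this estimate, a 7B model at FP16 needs about 14 GB for weights, which is in line with the ~15 GB listed above, while a 13B model needs 8-bit or 4-bit quantization to sit comfortably within 24 GB.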

Cost of Image Generation on RTX 4090 vs. Alternatives

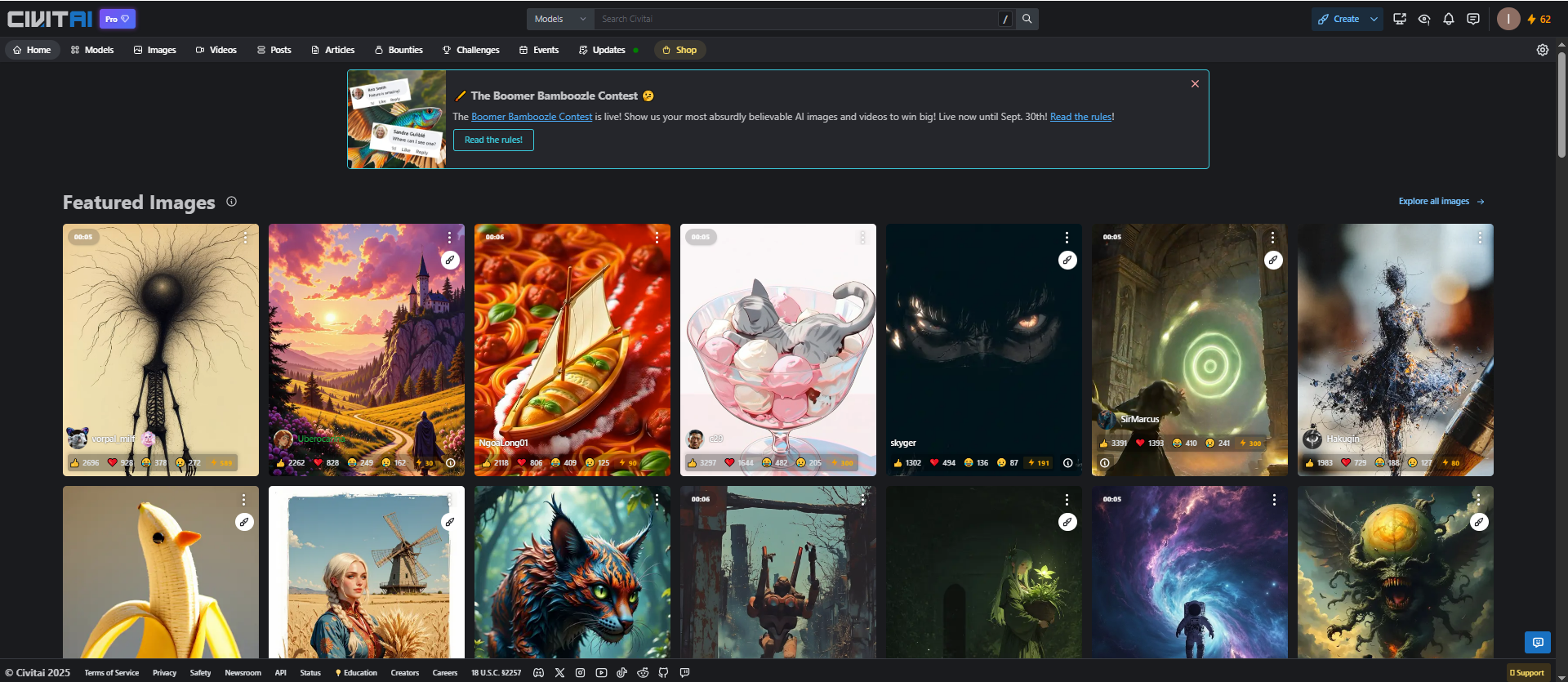

Another way to evaluate efficiency is by looking at the cost per generated image across different platforms.

| Platform | GPU Used | Cost per Image | Notes |
|---|---|---|---|
| RunC.AI (4090 rental) | RTX 4090 | ~$0.001 – $0.002 | $0.42/hr |
| Civitai | Cloud GPUs | $0.04 – $0.08 | Buzz-based credits |
| OpenArt | Cloud GPUs | $0.0037 – $0.11 | Credit-based pricing |
| SeaArt | Cloud GPUs | ~$0.002 – $0.008 | Very cheap but limited control |
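
The per-image figure for a rented GPU follows from simple arithmetic: hourly rate × seconds per image ÷ 3600. The sketch below shows the calculation in both directions. At $0.42/hr, a cost of $0.001 to $0.002 per image corresponds to roughly 8.6 to 17 seconds of GPU time per image. The function names are illustrative, and the estimate assumes the GPU is generating continuously with no idle time billed.

```python
# Cost-per-image arithmetic for an hourly GPU rental.
# Assumption: the GPU generates images back to back, with no idle time.


def cost_per_image(hourly_rate_usd: float, seconds_per_image: float) -> float:
    """Rental cost attributed to one image."""
    return hourly_rate_usd * seconds_per_image / 3600


def seconds_per_image_for_cost(hourly_rate_usd: float,
                               target_cost_usd: float) -> float:
    """GPU time per image implied by a given per-image cost."""
    return target_cost_usd * 3600 / hourly_rate_usd


if __name__ == "__main__":
    rate = 0.42  # USD per hour, from the table above
    for seconds in (5, 10, 20):
        print(f"{seconds} s/image -> ${cost_per_image(rate, seconds):.4f} per image")
    for cost in (0.001, 0.002):
        secs = seconds_per_image_for_cost(rate, cost)
        print(f"${cost} per image -> {secs:.1f} s of GPU time")
```

Your own cost per image will depend on the model, resolution, and number of sampling steps, so it is worth timing a few generations and plugging in the measured seconds.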

How to Run an RTX 4090 at a Very Low Price

1 × RTX 4090 = $0.42/hr

2 × RTX 4090 = $0.84/hr

4 × RTX 4090 = $1.68/hr

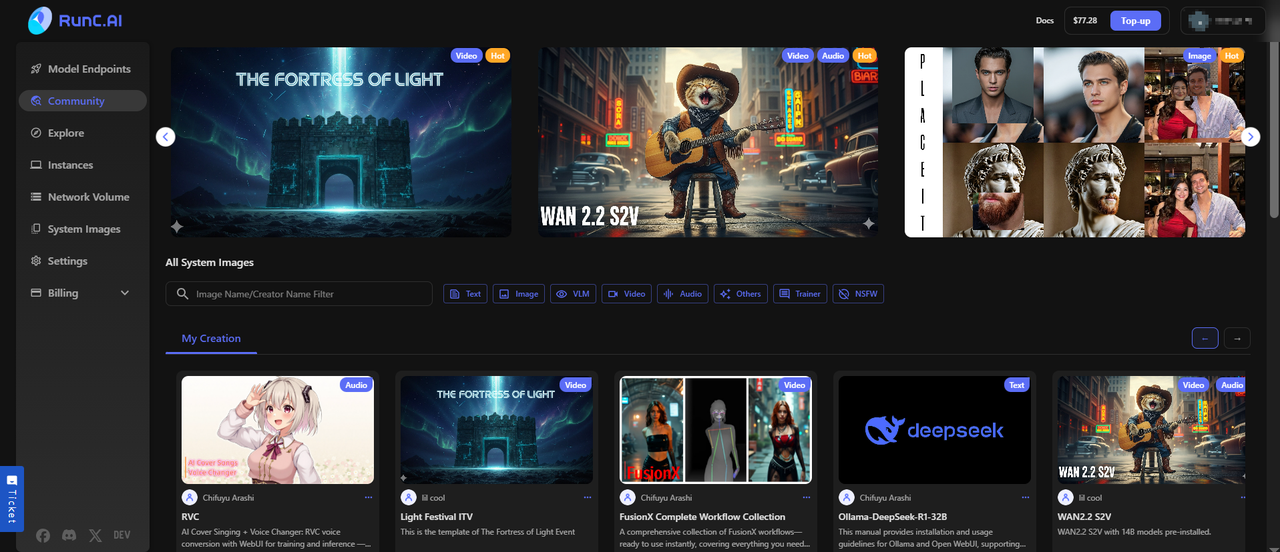

Step 1: Register an account

Sign up on RunC.AI.

Step 2: Explore templates and GPU servers

Pick your preferred container image and server type, from pod to VM.

Step 3: Launch an instance

Deploy the instance and enjoy.
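
Once the instance is running, it can be useful to confirm which GPU you actually received. The following is a minimal sketch that calls `nvidia-smi` and parses its CSV output. It assumes the instance image ships the NVIDIA driver with `nvidia-smi` on the PATH, which this article does not confirm; the helper names are hypothetical.

```python
# Sanity check after launching an instance: list GPU names and total memory.
# Assumption: the NVIDIA driver and the nvidia-smi CLI are installed.
import csv
import io
import subprocess


def parse_gpu_csv(text: str) -> list[dict]:
    """Parse `name, memory.total` rows (csv, no header, no units)."""
    gpus = []
    for row in csv.reader(io.StringIO(text)):
        if len(row) != 2:
            continue  # skip blank or malformed lines
        name, memory = (field.strip() for field in row)
        gpus.append({"name": name, "memory_mib": int(memory)})
    return gpus


def list_gpus() -> list[dict]:
    """Query nvidia-smi for each GPU's name and total memory in MiB."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_gpu_csv(result.stdout)


if __name__ == "__main__":
    try:
        for gpu in list_gpus():
            print(f"{gpu['name']}: {gpu['memory_mib']} MiB")
    except (FileNotFoundError, subprocess.CalledProcessError) as err:
        print(f"Could not query nvidia-smi: {err}")
```

On a multi-GPU rental, the loop prints one line per card, which makes it easy to check that the number of GPUs matches the plan you selected.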

The future of AI belongs to those who can experiment quickly and scale affordably. For AIGC creators, developers, and researchers, the RTX 4090 on RunC.AI is currently the most cost-effective solution to unlock real generative power. Instead of worrying about hardware investment, you can focus on what truly matters: building, creating, and innovating with AI.

Ready to try? You can start generating with RTX 4090 from just $0.42/hour on RunC.AI.