Welcome, everybody, to another RunC.AI tutorial. We will still be working with DeepSeek, but this time on the Ubuntu system image. Let's get started.

First, log in to your account as usual and click Deploy. Scroll down to Image and click System image. This time we will be using Ubuntu.

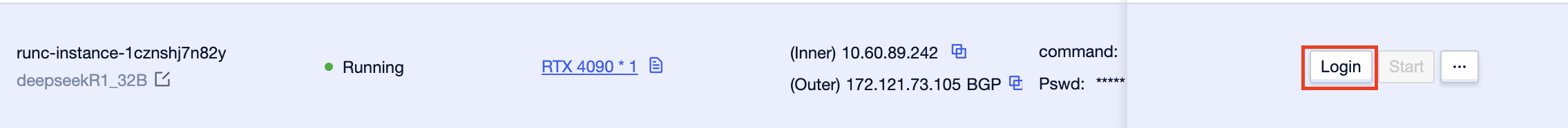

Then click the login button on the right.

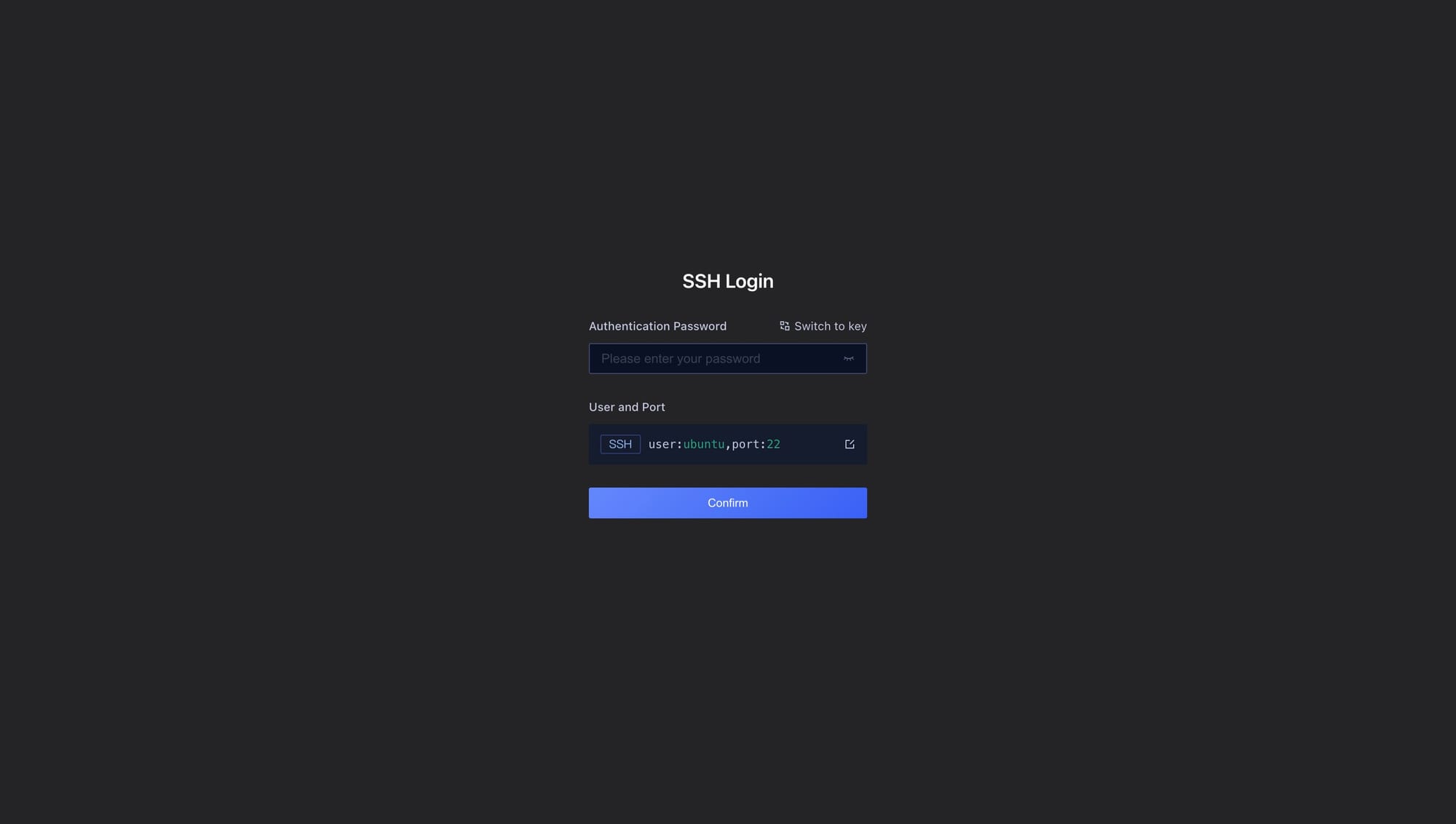

You will then see a page where you need to enter the password.

You can find your password here

Deploy Ollama

Once you are in the Ubuntu terminal, type in the following command to install Ollama.

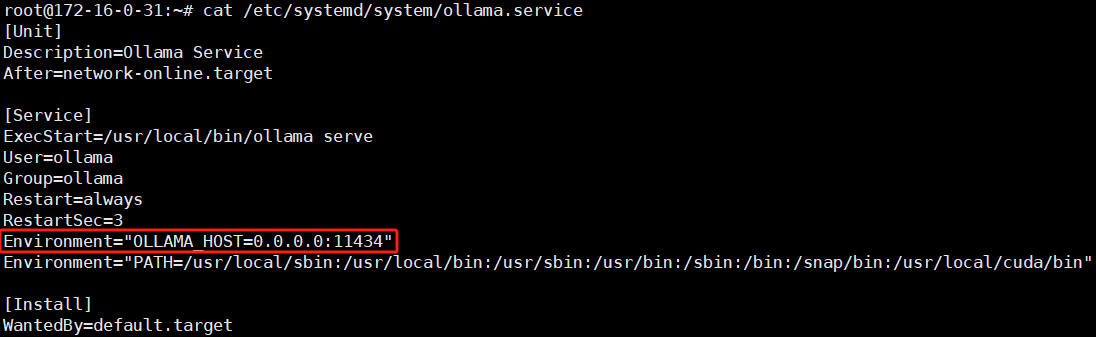

curl -fsSL https://ollama.com/install.sh | sh

By default, once the installation completes, there will be an ollama.service file. To let the host and Docker containers communicate with each other, the Environment variable in that file needs to be set to "OLLAMA_HOST=0.0.0.0:11434".

sudo vim /etc/systemd/system/ollama.service
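In the editor, the change amounts to one `Environment` line in the `[Service]` section. A minimal sketch of the relevant part, assuming the rest of the generated file is left untouched (any lines the installer already wrote in that section, such as a PATH entry, stay as they are):

```ini
[Service]
Environment="OLLAMA_HOST=0.0.0.0:11434"
```

Binding to 0.0.0.0 makes Ollama listen on all network interfaces rather than only on localhost.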

Now we need to reload systemd and restart Ollama so the change takes effect.

sudo systemctl daemon-reload

sudo systemctl restart ollama

Now we can pull the DeepSeek-R1 model.
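Before pulling the model, it may help to confirm that the restarted service is answering on port 11434. The sketch below is not part of the original steps: `ollama_url` and `check_ollama` are hypothetical helper names, and it assumes Ollama's `/api/version` endpoint and that `curl` is installed.

```shell
# Hypothetical helpers (not from the original tutorial).
# Build the URL of an Ollama endpoint; the defaults match the port
# configured in OLLAMA_HOST.
ollama_url() {
    host="${1:-127.0.0.1}"
    port="${2:-11434}"
    path="${3:-/api/version}"
    printf 'http://%s:%s%s\n' "$host" "$port" "$path"
}

# Return 0 if the service answers within 5 seconds, non-zero otherwise.
check_ollama() {
    curl -fsS --max-time 5 "$(ollama_url "$@")" >/dev/null
}
```

Running `check_ollama && echo "Ollama is reachable"` on the instance should print the message once the service is up. Passing an address as the first argument, as in `check_ollama IP`, tries the interface that other machines or containers would use.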

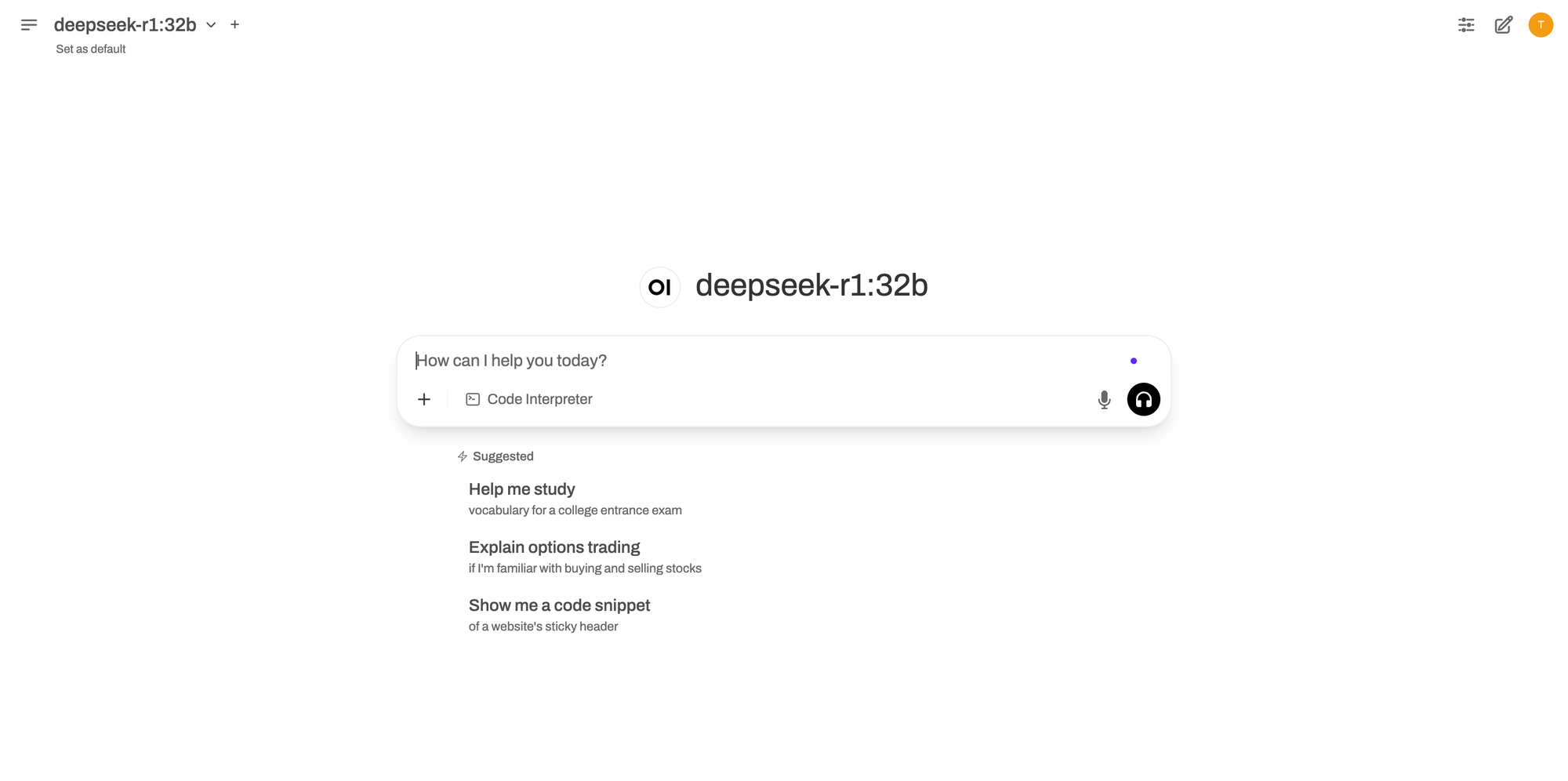

ollama run deepseek-r1:32b

Open-WebUI

Now we need to pull the Open-WebUI image.

First, follow NVIDIA's official guide to install and configure the NVIDIA Container Toolkit.

https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/latest/install-guide.html

Then type in the following command to pull the image.

sudo docker pull ghcr.io/open-webui/open-webui:cuda

For the WebUI inside the container to communicate with Ollama on the host, the Docker container must be allowed to use the host network directly.

docker run -d --network=host -v open-webui:/app/backend/data -e OLLAMA_BASE_URL=http://127.0.0.1:11434 --name open-webui --restart always ghcr.io/open-webui/open-webui:cuda

To access Open WebUI, visit http://IP:8080, where "IP" is your instance's IP address, which you can find in the following picture.

Now you can ask DeepSeek any question you want.
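The same model can also be queried from the terminal through Ollama's HTTP API, which is a quick way to check that the model answers even when the browser is not reachable. This is a minimal sketch, not part of the original steps: `generate_payload` and `ask_deepseek` are hypothetical helper names, and the payload builder does no JSON escaping, so it assumes the prompt contains no double quotes or backslashes.

```shell
# Hypothetical helper: build the JSON body for Ollama's /api/generate endpoint.
# Assumes the prompt contains no double quotes or backslashes (no escaping is done).
generate_payload() {
    printf '{"model": "%s", "prompt": "%s", "stream": false}\n' "$1" "$2"
}

# Send one prompt to the local Ollama server and print the raw JSON reply.
ask_deepseek() {
    curl -fsS http://127.0.0.1:11434/api/generate \
        -H 'Content-Type: application/json' \
        -d "$(generate_payload deepseek-r1:32b "$1")"
}
```

For example, `ask_deepseek "Why is the sky blue?"` sends a single prompt. With `"stream": false` the server replies with one JSON object instead of a stream of partial responses.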

About RunC.AI

Rent smart, run fast. RunC.AI allows users to gain access to a wide selection of scalable, high-performance GPU instances and clusters at competitive prices compared to major cloud providers like Amazon Web Services (AWS), Google Cloud, and Microsoft Azure.